Data Solutions for AI/ML

Data Solutions for Traditional and Generative AI Model Development

Train and Deploy Traditional and Generative AI Models with Confidence.

Innodata offers industry-leading data solutions to help you build, train, and deploy powerful Traditional and Generative AI models. With a global workforce, Innodata provides high-quality data for:

Prompt Engineering

RAG Development

Supervised Fine-Tuning

Red Teaming

Data Annotation

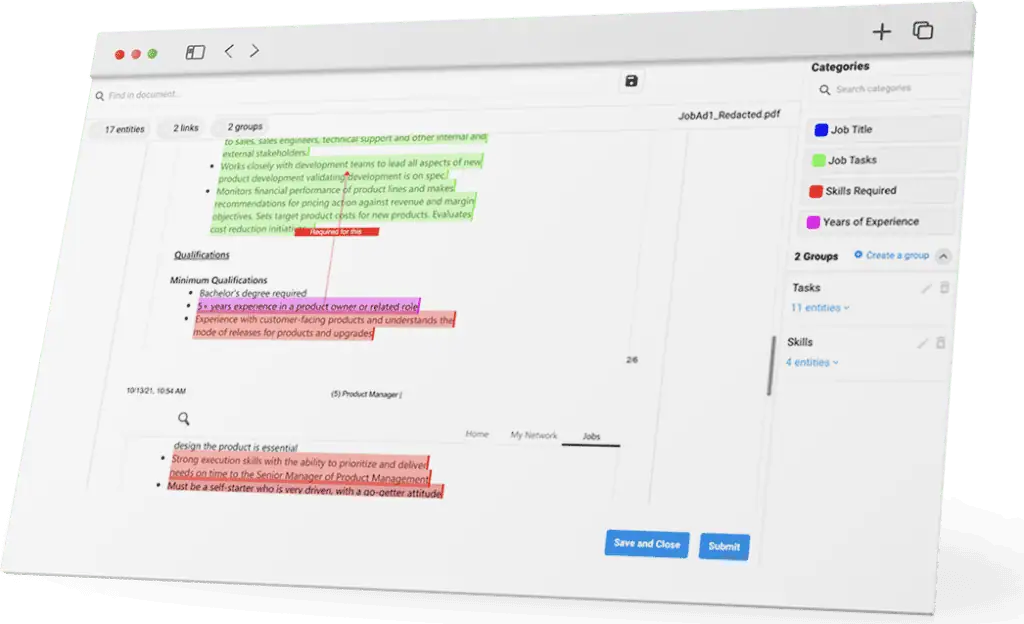

Fuel your traditional and generative AI/ML models with high-quality annotated training data. Our team of subject matter experts delivers accurate, reliable, and domain-specific data annotation services across all data types in 85+ languages.

Image, Video, & Sensor Annotation: From faces to places, power your visual-based and computer vision models with high-quality annotated data.

Text Annotation: Train your models with high-quality data annotated from the most complex text, code, and document sources.

Speech & Audio Annotation: Scale your audio-based AI/ML models and ensure model flexibility with diverse speech data in 40+ languages.

of a data scientist’s time is spent building training datasets, according to a leading cloud computing enterprise.*

- Data Types: Image, video, sensor (LiDAR), audio, speech, document, and code.

- Expertise Across Industries: Healthcare, finance, insurance, law, agritech, retail, autonomous vehicles, logistics, manufacturing, aviation, defense, and more…

Data Collection & Creation

Let Innodata source and collect speech, audio, image, video, text, and document training data for generative and traditional AI model development. We support 85+ languages worldwide and offer customized data collection services to meet any domain requirements.

Whether you need natural data collection, studio data capture, or on-the-ground data gathering, Innodata delivers custom datasets tailored to your unique model training needs.

Innodata also supports prompt engineering: our in-house experts design and create prompt data that guides LLMs toward precise outputs.

of respondents in a recent survey said their organization adopted AI-generated synthetic data because of challenges with real-world data accessibility.*

- Data Types: Image, video, sensor (LiDAR), audio, speech, document, and code.

- Demographic Diversity: Age, gender identity, region, ethnicity, occupation, sexual orientation, religion, cultural background, 85+ languages and dialects, and more.

Supervised Fine-Tuning

Linguists, taxonomists, and subject matter experts, all native speakers across 85+ languages, create datasets ranging from simple to highly complex for fine-tuning across an extensive range of task categories and sub-tasks (90+ and growing).

of respondents in a recent survey said fine-tuning an LLM successfully was too complex, or they didn’t know how to do it on their own.*

- Sample Task Taxonomies: Summarization, image evaluation, image reasoning, Q&A, question understanding, entity relation classification, text-to-code, logic and semantics, question rewriting, translation…

- SFT Techniques: Chain-of-thought, in-context learning, data augmentation, dialogue…

Human Preference Optimization

Rely on human experts-in-the-loop to close the divide between model capabilities and human preferences. Reduce hallucinations and improve edge-case handling with ongoing feedback to achieve optimal model performance through methods like RLHF (Reinforcement Learning from Human Feedback) and DPO (Direct Preference Optimization).

of respondents in a recent survey said RLHF was the technique they were most interested in using for LLM customization.*

- Example Feedback Types: DPO (Direct Preference Optimization), simple RLHF (Reinforcement Learning from Human Feedback), complex RLHF, nominal feedback.

Model Safety, Evaluation, & Red Teaming

Address vulnerabilities with Innodata’s red teaming experts. Rigorously test and optimize generative AI models to ensure safety and compliance, exposing model weaknesses and improving responses to real-world threats.

reduction in the violation rate of an LLM was seen in a recent study on adversarial prompt benchmarks after 4 rounds of red teaming.*

- Techniques: Payload smuggling, prompt injection, persuasion and manipulation, conversational coercion, hypotheticals, roleplaying, one-/few-shot learning, and more…

Why Choose Innodata for Your AI/ML Initiatives?

Global Delivery Centers & Language Capabilities

Quick Turnaround at Scale with Quality Results

Domain Expertise Across Industries

With 5,000+ in-house SMEs covering all major domains from healthcare to finance to legal, Innodata offers expert annotation, collection, fine-tuning, and more.

Linguist & Taxonomy Specialists

Customized Tooling

Fuel Traditional and Generative AI with Innodata.

Data solutions for Traditional and Generative AI model development.